My research focuses on scalable, efficient, and reliable learning for agentic AI systems, particularly large language models (LLMs), diffusion models, and other generative AI models. I explore methods that improve performance at inference time, shift the efficiency-performance curve, and make learning robust and reliable across diverse applications.

Test-Time Scaling & Adaptation

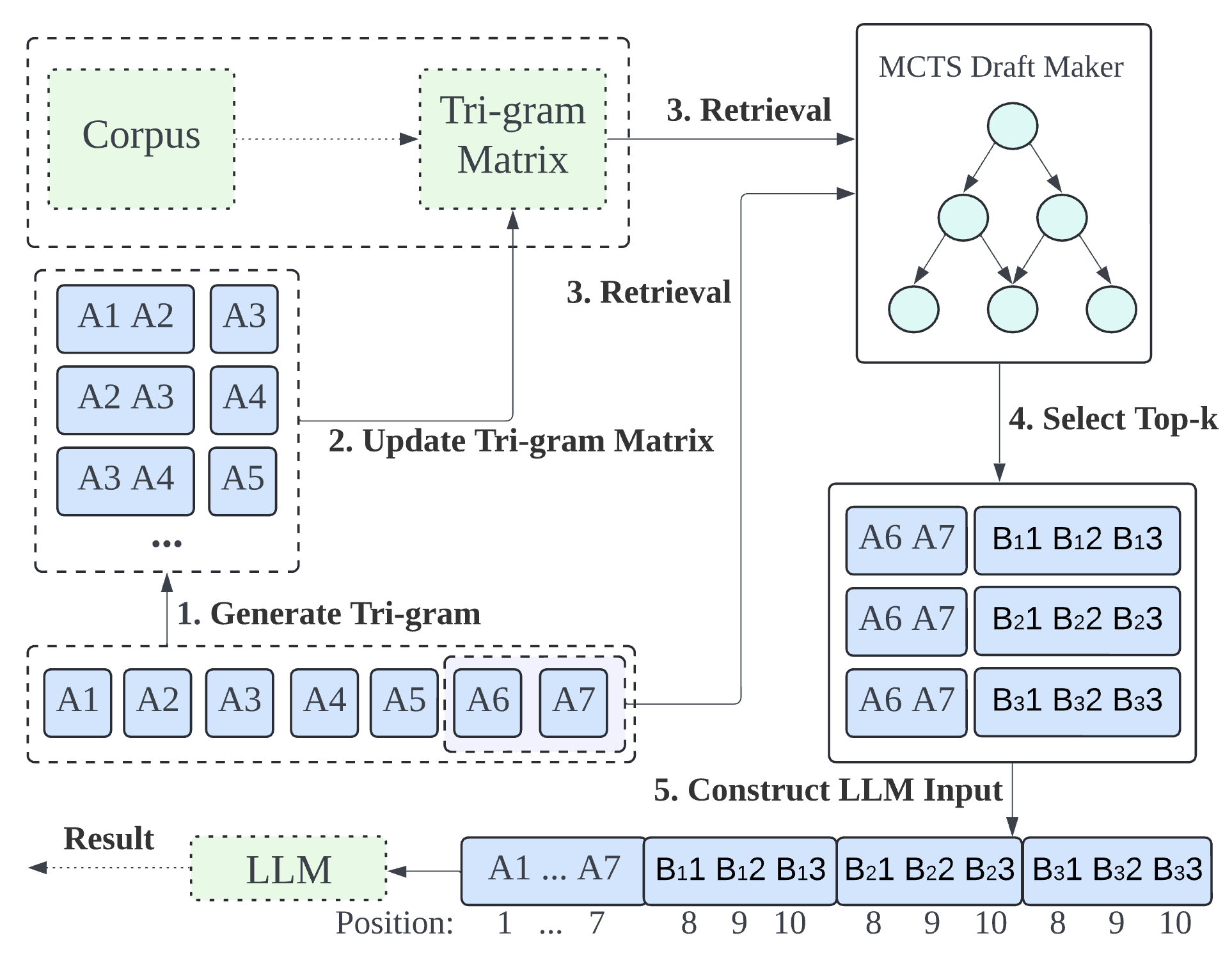

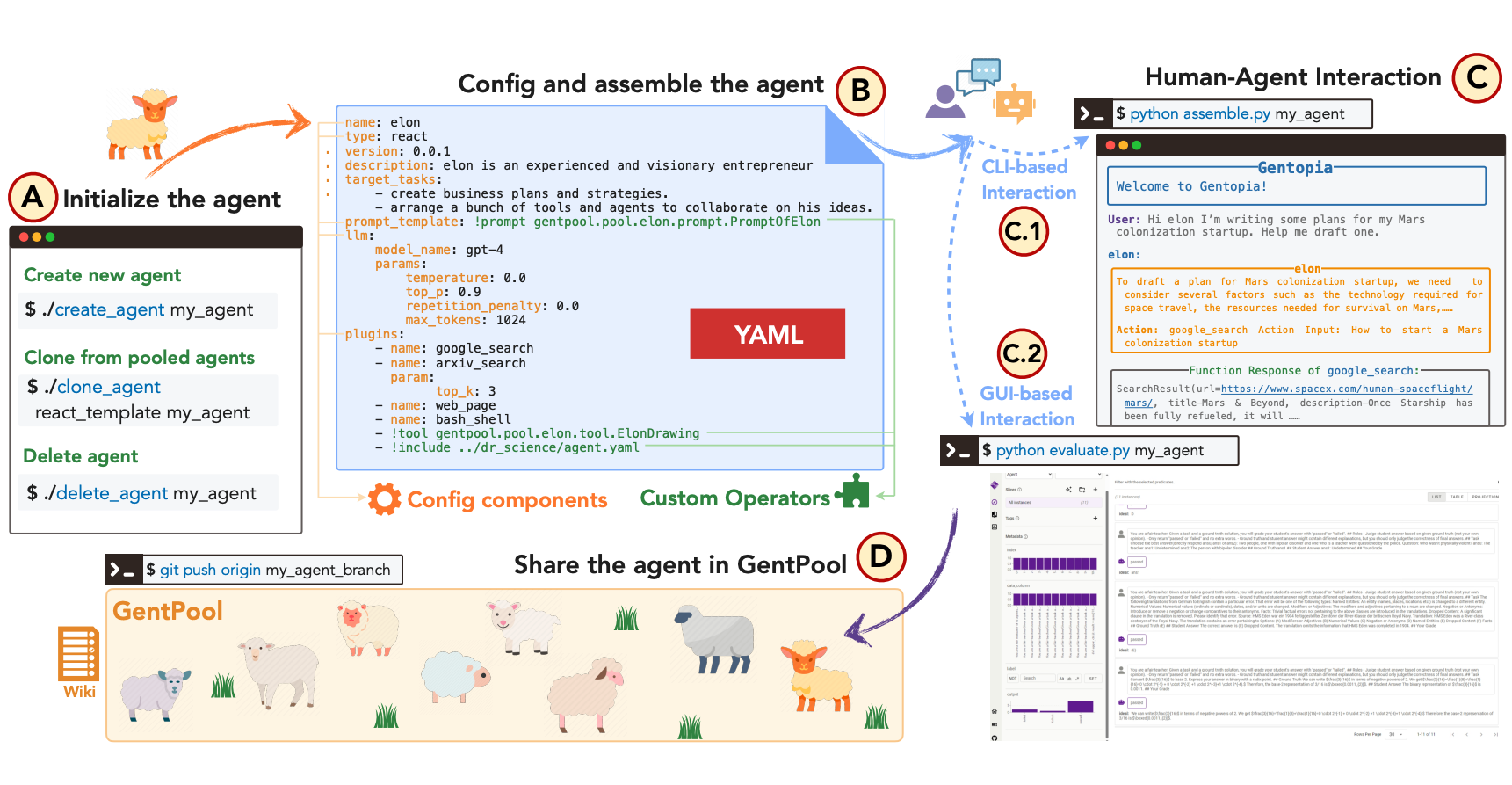

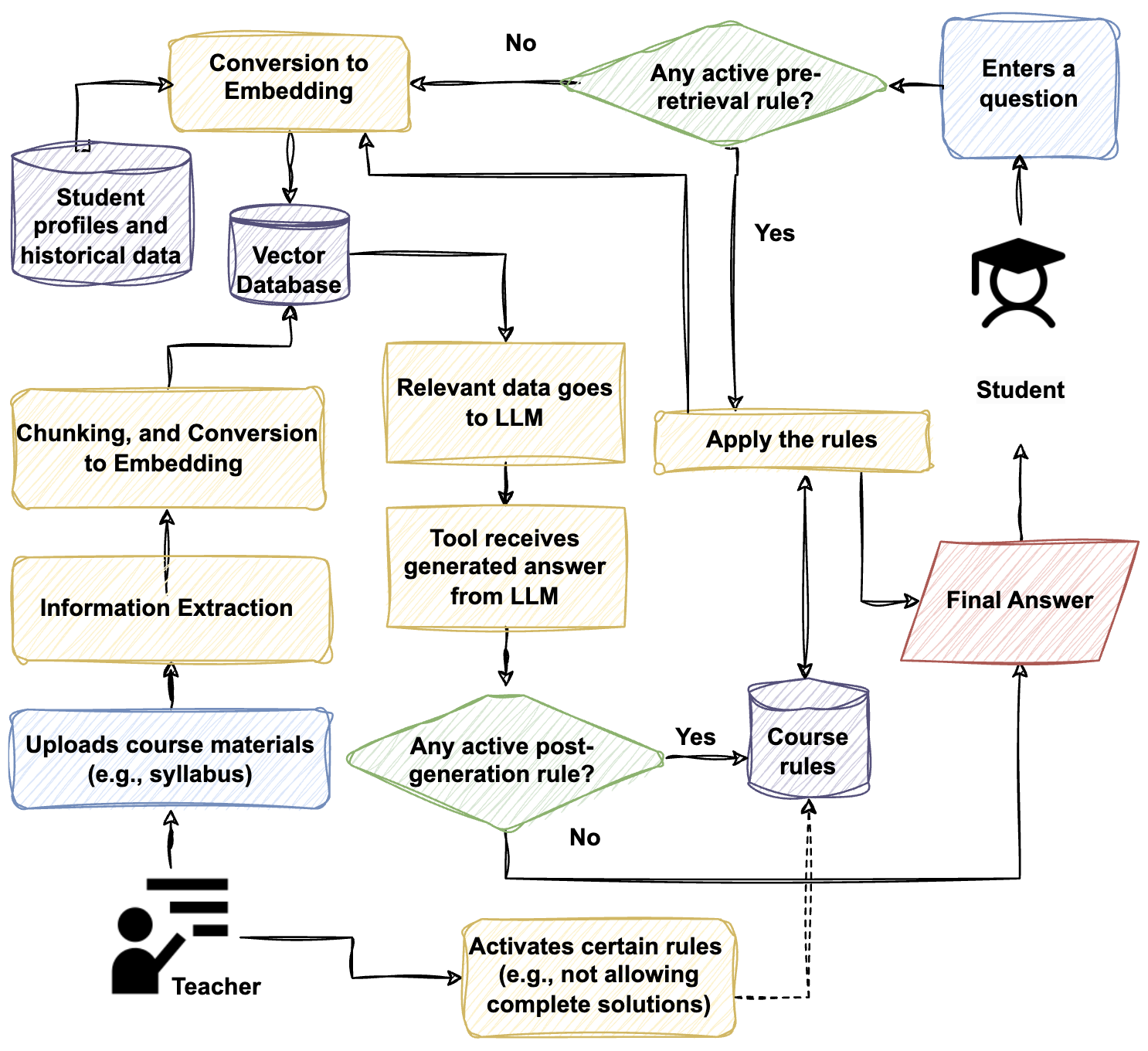

I study test-time scaling laws, which describe how model performance changes as more computation is spent at inference time, and use them to enhance performance dynamically. My work explores adaptive inference strategies and test-time training techniques that generate and exploit high-quality data. I also optimize reasoning workflows so that models can improve their accuracy through self-refinement.

Shifting the Computational Curve

To improve the efficiency-performance tradeoff of AI systems, I work on accelerating models and enabling dynamic computation, including model compression, knowledge distillation, and conditional computation. I also explore system-algorithm co-design for large-model training and inference, as well as hardware-algorithm co-design to develop energy-efficient neural network architectures and improve how models and AI accelerators work together.

Reliable Learning & Robust Generalization

Beyond efficiency, I work toward trustworthy AI through robustness, uncertainty quantification, and adaptive learning. My research includes uncertainty-aware techniques that let models recognize and communicate the uncertainty of their predictions, improving their reliability in deployment. I also investigate adaptive learning strategies that allow AI systems to update their knowledge efficiently from new data with minimal supervision.